32. Python Package - pandas

January 28, 2019

home

Contents

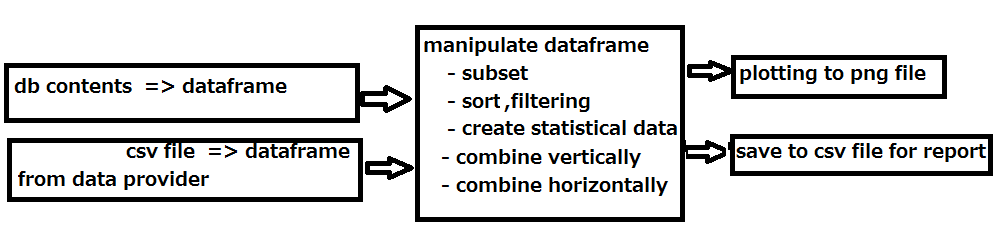

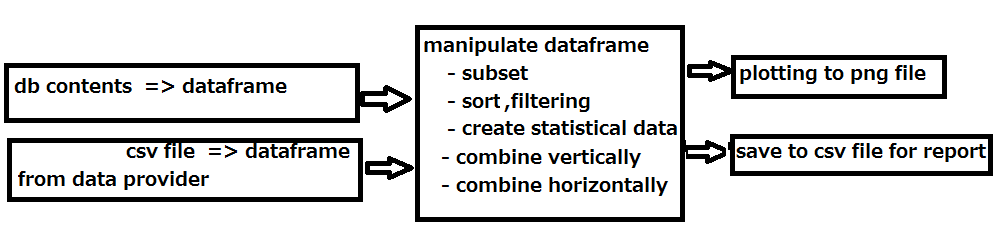

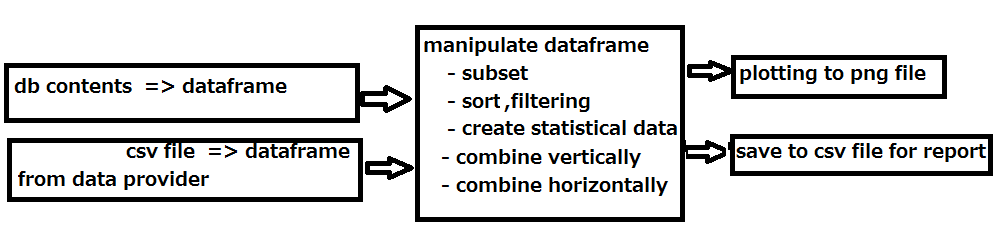

01. Streamline Data Analysis using DataFrame in package pandas

02. database content to DATA FRAME directly

description

- This topic is about getting data from a database, using MySql database.

- Other databases like Oracle, mssql,etc, or document databases for clouds, have the same way like MySql.

- In my previous topic 29, 05y, there is a lab for this.

- I did not use an IDE on my mac, I used website Repl.it to test.

- The data returned from the database server were many lines, not a pandas dataframe.

- In this lab,

- In my mac, Anacode3 was installed for python, pandas...

- Code visual studio was install for IDE.

- The steps to install the database driver with python for mac

- from Google, getting the package name for pip install. mysql-connector-python

- getting the package name for my python code mysql.connector

- open the terminal window,

- pip --version to make sure pip is installed.

- pip list to make sure mysql is not installed

- pip install mysql-connector-python

- note: the package is for all the operating system.

- pip list to see it in the list.

- To verify the success of the installation, using one line of python code import mysql.connector

- To uninstall, enter pip uninstall mysql-connector-python

print('---------- mysql3.py --------')

import pandas as pd

import mysql.connector

mydb = mysql.connector.connect(

host="XXXX.winhost.com",

user="xxxxxxxx",

password="xxx2xxx",

database="mysql_56314_peter"

)

df = pd.read_sql("SELECT * FROM dogs", con=mydb)

print(df)

'''

dog_name dog_color

0 Tairo brown

1 Emi white

2 Joy black

3 Lucky yellow

'''

print('---------- end test 5 --------')

comments:

- If a sql query is used for creating a dataframe from a database server.

- The query can be used to define the data.

- No need to use some pandas functions, like sort, merge.. for that purposes.

- Also, it is more direct.

03. creating dataframes for reporting

03.1 prepare statistical data

- In addition to plotting, a dataframe can be used for reporting.

- One example is to prepare statistical information.

- Then, send it out as report

print('------- dataframe ==> csv file for reporting ---')

print('--- 1. prepare a dataframe -------')

import pandas as pd

df =pd.DataFrame({ # python dictionary

'name': ['Happy', 'Lucky', 'Wiwi'], # key-value pairs

'weight' : [35.50, 65.33, 42.50],

'length' : [2.5, 4.3, 3.2],

'color': ['brown', 'white', 'black']

})

print(df)

# The values for color are not measurable,

# The statistical data will not be generated for it.

print('--- 2. create a report -------')

info = df.describe()

print(info)

print('type of info = ' + str(type(info))) # dataframe

'''

weight length

count 3.000000 3.000000

mean 47.776667 3.333333

std 15.599347 0.907377

min 35.500000 2.500000

25% 39.000000 2.850000

50% 42.500000 3.200000

75% 53.915000 3.750000

max 65.330000 4.300000

note-1: The index are created using method describe.

note-2: Method describe is defined in a standard way.

'''

print('--- 3. save the df to csv file ----')

info.to_csv('dogs.csv', header = True, index = True)

#the file will be in the parent of the current folder for my mac.

print('--------- end of test ---------')

04. Storing python objects

description

- Python objects like dataframe, list, dictionary can be stored in files

with file extension pickle.

- Pickling means to store for later uses,

like a cucumber that is preserved in brine, vinegar, or the like.

- They can be passed around in a unprotected manners.

- The pickle file format is in binary.

- If the python file is under the working folder, the pickle file will be saved under the same folder.

import pickle # built-in module

print('--- 1. pickle write ------------')

my_dict = {1: 'Lucky', 2: 'Happy', 3: 'Joy'}

pickle_out = open('my_dict.pickle', 'wb') #write output file, byte

pickle.dump(my_dict, pickle_out) #from, to

pickle_out.close()

print('--- 2. pickle read ------------')

pickle_in = open('my_dict.pickle', 'rb')

dict2 = pickle.load(pickle_in)

pickle_in.close()

print(dict2) #{1: 'Lucky', 2: 'Happy', 3: 'Joy'}

print('type of dict2 = ' + str(type(dict2))) #dict

print('--- end test -----')

05. Creating DataFrames from CSV files

description

- In topic 30,section 02, from web

- In topic 30,section 03, from local device

- I am using mac and code visual studio.

- In code visual studio IDE, there is a window pane for files and folders explore.

- When you open a folder, that is the working directory.

- You can also use python os module's methods getting csv file from different locations.

- os.path.join(..,..)

- os.getcwd()

- os.path.abspath(...)

06. Numpy, Series

types in a dataframe

- Many data types in a dataframe are converted into types defined in numpy.

- The purposes are for efficiency, better interfaces with other languages like C, Java.

print('--- 1, create df from a python dictionary ----')

import pandas as pd

dogs = {

'name': ['Lucky', 'Happy', 'Joy'], # column names

'age': [8, 6, 3], # column 1

'weight':[41.5, 32.7, 64.4], # column 2

}

df = pd.DataFrame(dogs)

print(df)

c01 = df['age'][1]

print('c01 = ' + str(c01)) # 6

print('c01 type is ' + str(type(c01))) # class 'numpy.int64'

c11 = df['weight'][1]

print(c11) # 32.7

print('c11 type is ' + str(type(c11))) # class 'numpy.float64'

numpy objects

- A numpy object is an array.

- It can be multi-dimensional.

- It is a classic array.

- You can create it or slice from a dataframe

- The following code to use it for create a dataframe.

import numpy as np

import pandas as pd

data = np.array([['', 'Col1', 'Col2','name'], #python lists

[0, '11npa','12npa', 'A'], #python lists

[1, '21npa','22npa', 'B']]) #python lists

df = pd.DataFrame(data = data[1:,1:],

index=data[1:,0],

columns= data[0,1:])

print(df)

print('df.index = ' + str(df.index))

print('df.columns = ' +str(df.columns))

print('df.values = ' + str(df.values))

Get a column or a row in SERIES from a dataframe

- The return data type is Series.

- It is defined in pandas.

- A series object has columns and rows.

- It looks like a one-column dataframe.

# continue to use the dataframe for dogs data

print('--- 2. get a column ---------------')

c1 = df['weight']

print(' c1 = ' + str(c1))

print('c1 type = ' + str(type(c1))) # pandas.core.series.Series

'''

0 41.5

1 32.7

2 64.4

'''

print('--- 3. get a row ---------------')

r1 = df.iloc[1]

print(r1)

print(' r1 type ' + str(type(r1))) # pandas.core.series.Series

''' note: column names are on the left

name Happy

age 6

weight 32.7

'''

Create Series Objects

- First, create two Series objects from python lists.

- Then, create a dictionary by using them.

- Finally, prepare a dataframe from it.

import pandas as pd

c1 = pd.Series([22,55,33]) # Series for one column values

c2 = pd.Series([99,77,11]) # Series for one column values

print('c1 type is ' + str(type(c1))) # class pandas.core.series.Series

dict = {'col1': c1,'col2': c2} # two column names

print('dict type is ' + str(type(dict))) #class 'dict'

df = pd.DataFrame(dict) # class dataframe

print(df)

07. DataFrame.loc for both rows and columns

Based on my study from Pandas API - pandas.dataframe.loc

- Pandas API home page has comprehensive examples.

- df.loc[['viper', 'sidewinder'], ['shield']]

- example 1: Access a group of rows and columns by label(s).

- Labels are a index name or column names.

- ['viper', 'sidewinder'] is the list for rows.

- ['shield'] is the list for columns

- df.loc[[False, False,True]]

- example 2:Access a group of rows and columns by a boolean array.

- 3 rows df

- The 3rd row selected.

08.Columns first or Rows first

08.1 Columns First

print('------- dataframe column test , Jan 16, 2019 -----')

import pandas as pd

print('--- 1. create df ----')

import pandas as pd

dogs = {

'name': ['Lucky', 'Happy', 'Joy'],

'age': [8, 6, 3],

'weight':[41.5, 32.7, 64.4],

}

df = pd.DataFrame(dogs)

print(df)

'''

name age weight

0 Lucky 8 41.5

1 Happy 6 32.7

2 Joy 3 64.4

'''

print('--- 2. rearrange columns ----')

cols2 = ['age', 'weight', 'name'] #python list

df2 = df[cols2]

print(df2)

print('--- 3. drop a column -------')

df3 = df.drop('weight', 1) # axis number, 1 for column

print(df3)

print('--- 4. add a column ---------')

dogs_color = ['white', 'brown', 'black'] #python list, no need for Series

df['color'] = dogs_color # color is the new column name

print(df)

print('--- 5. sort ---------')

df5 = df.sort_values(by=['age']) # no need from index for row

print(df5)

print('--- 6. access one column ---------------------')

column_weight = df['weight']

print('column weight = ' + str(column_weight))

'''

0 41.5

1 32.7

2 64.4

'''

print('--- 7. access a cell --------------------------')

weight4Joy = df['weight'][2] # index is not set for row

print('weight4Joy = ' + str(weight4Joy)) #64.4

'''

notes:

1. There are TWO ways to access a cell in a dataframe.

2. This is one way to get all the column first. Then select a row.

'''

print('---------- end of test 5 ------------------------')

08.2 Rows FIRST

numeric indices

- In the first demo is to use numeric indices to access a dataframe.

- Method iloc is used. [...] is for method arguments, not (...)

- In this demo, they are automatically generated. The index type is RangeIndex.

- Using this way, I'll not treat them as explicit and use loc to access them.

import pandas as pd

import pandas as pd

dogs = {

'name': ['Lucky', 'Happy', 'Joy'],

'age': [8, 6, 3],

'weight':[41.5, 32.7, 64.4],

}

df = pd.DataFrame(dogs)

print(df)

print(df.index) #RangeIndex(start=0, stop=3, step=1)

'''

name age weight

0 Lucky 8 41.5

1 Happy 6 32.7

2 Joy 3 64.4

'''

d1 = df.iloc[1] # 1 row

print('d1 = ' + str(d1))

print('d1 type is ' + str(type(d1))) #class 'pandas.core.series.Series'

'''

name Happy

age 6

weight 32.7

'''

d01 = df.iloc[0:2] # 0, 1 rows, row 2 is excluded.

print('d01 = ' + str(d01))

print('d01 type is ' + str(type(d01))) #dataframe

'''

d01 = name age weight

0 Lucky 8 41.5

1 Happy 6 32.7

'''

explicit indices

- In the first demo is to use explicit indices to access a dataframe.

- Method loc is used.

import pandas as pd

dogs = {

'name': ['Lucky', 'Happy', 'Joy'],

'age': [8, 6, 3],

'weight':[41.5, 32.7, 64.4],

}

df = pd.DataFrame(dogs)

df.set_index('name', inplace=True)

df.sort_values(by='name', ascending=True, inplace=True)

print(df)

'''

age weight

name

Happy 6 32.7

Joy 3 64.4

Lucky 8 41.5

'''

d1 = df.loc['Joy'] # 1 row

print('d1 = ' + str(d1))

print('d1 type is ' + str(type(d1))) # Series

'''

age 3.0

weight 64.4

Name: Joy, dtype: float64

'''

d01 = df.loc['Happy':'Joy'] # 0, 1 rows, row 1 is included.

print('d01 = ' + str(d01))

print('d01 type is ' + str(type(d01))) # dataframe

'''

age weight

name

Happy 6 32.7

Joy 3 64.4

'''

access a cell

- This is the second way to access a cell

- Using loc to get a row first.

- Then, set the column.

Joy_weight = df.loc['Joy']['weight']

print('Joy_weight = ' + str(Joy_weight)) # 64.5

09. Multilevel indices and grouping

my comments, Jan, 2019

- Good for reporting

1-2, data for this demo, as categorical data

- data: dog weight measurements

- based on dog name, month

- one dog, one month, one weight measurement.

3. create mutilevel indices, grouping

- df2.set_index(['name', 'month'])

- The result dataframe is a report.

4. Grouping

- When you create multilevel indices, data are grouped into two level

- level-1: name

- level-2: month

- There is no need under the context of containments - like counties-states, years-months.

- You use statistical methods to get related statistical data in a report dataframe.

5. making the 2nd index to a column

- using method unstack

- The result dataframe is also a report.

# --- 1. prepare a csv file ----

name,month,weight

Joy, 1, 45.9

Happy, 3, 12.3

Happy, 1, 11.1

Joy, 3, 42.2

Happy, 2, 10.5

Joy, 2, 43.1

# ---2. create a df, sort

import pandas as pd

import pandas as pd

df = pd.read_csv('dogs_weight.csv')

df2 = df.sort_values(by=['name','month'])

print(df2)

'''

name month weight

2 Happy 1 11.1

4 Happy 2 10.5

1 Happy 3 12.3

0 Joy 1 45.9

5 Joy 2 43.1

3 Joy 3 42.2

'''

#---- 3. create mutilevel indices

df3 = df2.set_index(['name', 'month'])

print(df3)

'''

weight

name month

Happy 1 11.1

2 10.5

3 12.3

Joy 1 45.9

2 43.1

3 42.2

'''

#--- 4. grouping -------------------------

df4 = df3.groupby('name')

s_report = df4.mean()

print(s_report)

''' weight

name

Happy 11.30

Joy 43.73

'''

#--- 5. having the 2nd index to be a column

df5 =df3.unstack()

print(df5)

'''

month 1 2 3

name

Happy 11.1 10.5 12.3

Joy 45.9 43.1 42.2

'''

10. pivoting-drill down

- This demo is about resturants tip data.

- Two restaurants - Pandas,Amber

- For lunch and dinner of 2 days are recorded

------------- csv data ------------------------

name,store,day,time,tip

Joe,Pandas,1,lunch,250.50

Joe,Pandas,1,dinner,420.50

Joe,Pandas,2,lunch,110.50

Joe,Pandas,2,dinner,120.50

Nancy,Pandas,1,lunch,150.50

Nancy,Pandas,1,dinner,320.50

Nancy,Pandas,2,lunch,410.50

Nancy,Pandas,2,dinner,220.50

Michael,Amber,1,lunch,310.00 note: one data for Muchael is not there

Michael,Amber,2,lunch,110.50

Michael,Amber,2,dinner,315.00

Carey,Amber,1,lunch,110.00

Carey,Amber,1,dinner,315.00

Carey,Amber,2,lunch,150.50

Carey,Amber,2,dinner, 215.00 note: space is ok

---------------- code -----------------------------------

print('------ pivot table, drilldown -----')

import pandas as pd

print('--- 1. prepare df --------')

df = pd.read_csv('tips.csv')

print(df.head())

'''

name store day time tip

0 Joe Pandas 1 lunch 250.5

1 Joe Pandas 1 dinner 420.5

2 Joe Pandas 2 lunch 110.5

3 Joe Pandas 2 dinner 120.5

4 Nancy Pandas 1 lunch 150.5

'''

print('---2. create pivot for drill down ---')

df2 = pd.pivot_table(df,'tip',['store','name'],['day','time'])

print(df2)

'''

day 1 2

time dinner lunch dinner lunch

store name

Amber Carey 315.0 110.0 215.0 150.5

Michael NaN 310.0 315.0 110.5

Pandas Joe 420.5 250.5 120.5 110.5

Nancy 320.5 150.5 220.5 410.5

'''

print('---- end of test -------')

11. Combining dataframes

11.1 Vertically

- append, for loop

- concat, for known elements

import pandas as pd

df1 = pd.DataFrame([[11,12],[21,22]], columns = ['A', 'B'])

df2 = pd.DataFrame([[31,32],[41,42]], columns = ['A', 'B'])

df12 = df1.append(df2, ignore_index = True)

print('df12 = ' + str(df12))

'''

A B

0 11 12

1 21 22

2 31 32

3 41 42

'''

df12x =pd.concat([df1, df2], ignore_index = True)

print(' df12x = ' + str(df12x)) # the same

11.2 Horizontally

- like sql inner join

print('------------------- test merge -----------------------')

import pandas as pd

print('------ workers ------------------------------')

workers = pd.DataFrame([{'name': 'Joe','emp_id': 12,'rate': 15.50},

{'name': 'Emi','emp_id': 35,'rate': 20.10}])

print(workers)

'''

emp_id name rate

0 12 Joe 15.5

1 35 Emi 20.1

'''

print('----- works ------------------')

works = pd.DataFrame([{'id': 12,'hours': 24},

{'id': 35,'hours': 41}])

print(works)

'''

hours id

0 24 12

1 41 35

'''

print('----- merge ---------------------')

m = pd.merge(workers, works, left_on='emp_id', right_on='id').drop('id',axis=1)

print(m)

'''

emp_id name rate hours

0 12 Joe 15.5 24

1 35 Emi 20.1 41

'''

print('------------ end -- test 3----------------------------')

12. Data Cleanup

- Handling missing data is based on the scenarios

- Two are presented as below.

# --- senario-1 --------------------------------------

# --- mydogs.csv-----

name,month,weight

Joy, 1, 45.9

Happy, 3

Happy, 1, 11.1

Joy, 3

Happy, 2, 10.5

Joy, 2, 43.1

# --- python code ----

import pandas as pd

print('--- data with missing values ----')

dogs= pd.read_csv('mydogs.csv')

print(dogs)

'''

name month weight

0 Joy 1 45.9

1 Happy 3 NaN

2 Happy 1 11.1

3 Joy 3 NaN

4 Happy 2 10.5

5 Joy 2 43.1

''''

print('----- solution: fill 0 -----------')

dogs['weight'].fillna(0, inplace=True)

print(dogs)

'''

name month weight

0 Joy 1 45.9

1 Happy 3 0.0

2 Happy 1 11.1

3 Joy 3 0.0

4 Happy 2 10.5

5 Joy 2 43.1

'''

# --- senario-2 ---------------------------------------------

# --- my_expenses.csv-----

item, amount Weekday

misc, 50.10 Mon

foods, 120.99 Wed

haircut, 20.55 Thurs

dinner, 99.99 Sun

, 15.00 Sun

misc, 100.50 Sat

# ---python code -----

import pandas as pd

print('--- data with missing values -------------')

expenses= pd.read_csv('my_expenses.csv')

print(expenses)

'''

item amount Weekday

0 misc 50.10 Mon

1 foods 120.99 Wed

2 haircut 20.55 Thurs

3 dinner 99.99 Sun

4 NaN 15.00 Sun

5 misc 100.50 Sat

'''

print('----- solution: fill them with reasonable values -----------')

expenses['item'].fillna(value='misc', inplace= True)

print(expenses)

'''

item amount Weekday

0 misc 50.10 Mon

1 foods 120.99 Wed

2 haircut 20.55 Thurs

3 dinner 99.99 Sun

4 misc 15.00 Sun

5 misc 100.50 Sat

'''

print('------- end of test -------------')

13. List Comprehension

- A list comprehension is a syntactical construct in python language.

- It creates a new list from the existing list.

print('--- 1. prepare a dataframe')

import pandas as pd

data = {'product': ['A', 'B', 'C', 'D'],

'id': [1, 2, 3, 4],

'hourly_pay': [35.50, 16.50, 17.50, 120.50]}

df = pd.DataFrame(data)

print(df)

'''

product id hourly_pay

0 A 1 35.5

1 B 2 16.5

2 C 3 17.5

3 D 4 120.5

'''

print('--- 2. Using list comprehension and a function to convert a list ---')

def myfunc(p):

if p < 100:

return p * 1.1

else:

return p

new_hourly_pay = [myfunc(p) for p in df['hourly_pay']]

print('--- 3. add a new column ------------')

df['new_hourly_pay'] = new_hourly_pay

print(df)

'''

product id hourly_pay new_hourly_pay

0 A 1 35.5 39.05

1 B 2 16.5 18.15

2 C 3 17.5 19.25

3 D 4 120.5 120.50

'''

print('--- or 4. update ------------------')

#df['hourly_pay'] = new_hourly_pay

#print(df)

14. method iterrows

- iterate each row in a dataframe

import pandas as pd

print('----- 1. create df -------------')

df = pd.DataFrame({

'type': ['A', 'B', 'C'],

'price': [11.11, 22.22, 33.33],

'quantity': [2, 4, 3]

})

df.set_index('type', inplace=True)

print(df)

'''

price quantity

type

A 11.11 2

B 22.22 4

C 33.33 3

'''

print('---- 2. Using method iterrows to iterate each row ---')

total_amts = []

for index, row in df.iterrows():

amt = row['price'] * row['quantity']

total_amts.append(amt)

df['total_amt'] = total_amts # add a column

print(df)

'''

price quantity total_amt

type

A 11.11 2 22.22

B 22.22 4 88.88

C 33.33 3 99.99

'''

15. code visual studio for python

- In this topic, some features are discussed.

- set breakpoints and run

- In the editor, a python file is open.

- On the left end of a code, click it.

- A red dot is seen, a break point is set.

- Click the debug button.

- The codes will be executed before the breakpoint.

- You do not have examine any before that. Focus the current spots.

- examine data

- With the debug window open on the left.

- In the variables window pane,

- click local window pane

- Hover the mouse over a dataframe content, you can see it.

- You do not have to use print method to see a dataframe.

- examine collection data type

- First, set the break points, and run.

- On the right lower window, click ...

- then, click debug window

- The window with one line is open,

- You can enter print(type(my_new_data)) to examine the type.

- It is more comfortable to know what you get - dataframe, Series, Numpy array...